On my podcast this week, I took a closer look at OpenAI’s new video generation model, Sora 2, which can turn simple text descriptions into impressively realistic videos. If you type in the prompt “a man rides a horse which is on another horse,” for example, you get, well, this:

AI video generation is both technically interesting and ethically worrisome in all the ways you might expect. But there’s another element of this story that’s worth highlighting: OpenAI accompanied the release of their new Sora 2 model with a new “social iOS app” called simply Sora.

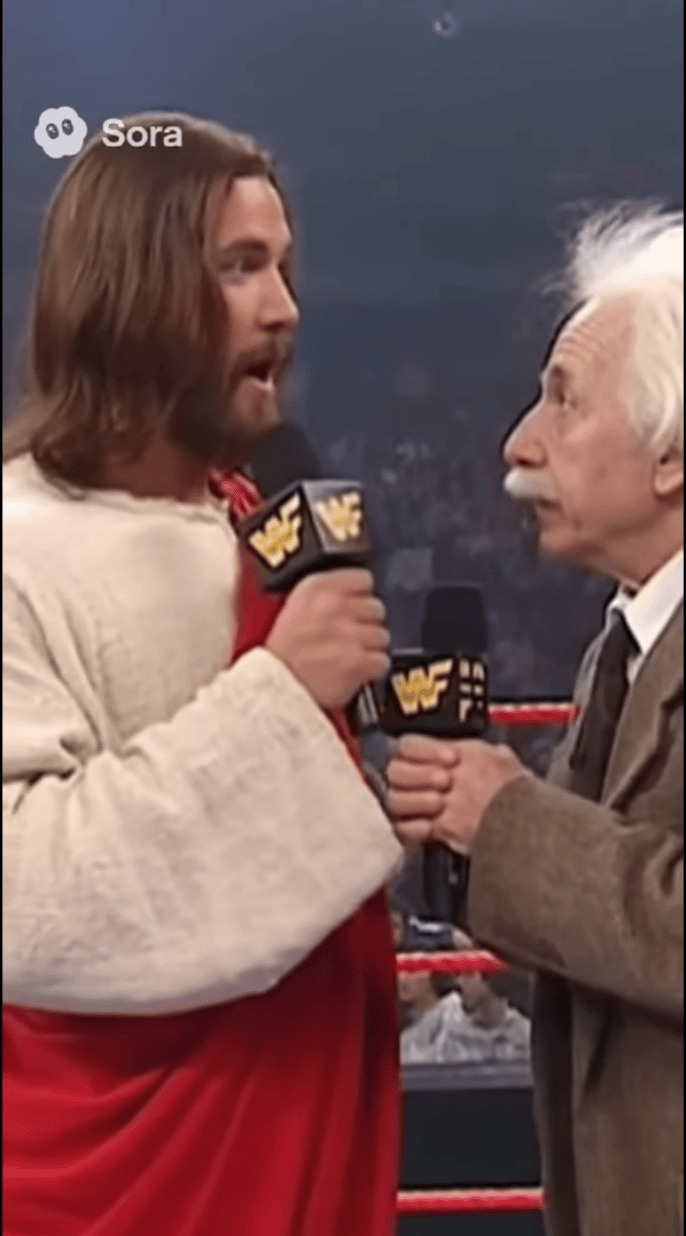

This app, clearly inspired by TikTok, makes it easy for users to quickly generate short videos based on text descriptions and consume others’ creations through an algorithmically curated feed. The videos flying around this new platform are as outrageously stupid or morally suspect as you might have guessed; e.g.,

Or,

The Sora app, in other words, takes the already purified engagement that fuels TikTok and removes any last vestiges of human agency, resulting in an artificial, high-octane slop.

It’s unclear whether this app will last. One major issue is the back-end expense of producing these videos. For now, OpenAI requires a paid ChatGPT Plus account to generate your own content. At the $20 tier, you can pump out up to 50 low-resolution videos per month. For a whopping $200 a month, you can generate more videos at higher resolutions. None of this compares favorably to competitors like TikTok, which are vastly cheaper to operate and can therefore not only remain truly free for all users, but actually pay their creators.

Whether Sora lasts or not, however, is somewhat beside the point. What catches my attention most is that OpenAI released this app in the first place.

It wasn’t that long ago that Sam Altman was still comparing the release of GPT-5 to the testing of the first atomic bomb, and many commentators took Dario Amodei at his word when he proclaimed that 50% of white-collar jobs might soon be automated by LLM-based tools.

A company that still believed that its technology was imminently going to run large swaths of the economy, and would be so powerful as to reconfigure our experience of the world as we know it, wouldn’t be seeking to make a quick buck selling ads against deepfake videos of historical figures wrestling. They also wouldn’t be entertaining the idea, as Altman did last week, that they might soon start offering an age-gated version of ChatGPT so that adults could enjoy AI-generated “erotica.”

To me, these are the acts of a company that poured tens of billions of investment dollars into creating what they hoped would be the most consequential invention in modern history, only to finally realize that what they wrought, although very cool and powerful, isn’t powerful enough on its own to deliver a new world all at once.

In his famous 2021 essay, “Moore’s Law for Everything,” Altman made the following grandiose prediction:

“My work at OpenAI reminds me every day about the magnitude of the socioeconomic change that is coming sooner than most people believe. Software that can think and learn will do more and more of the work that people now do. Even more power will shift from labor to capital. If public policy doesn’t adapt accordingly, most people will end up worse off than they are today.”

Four years later, he’s betting his company on its ability to sell ads against AI slop and computer-generated pornography. Don’t be distracted by the hype. This shift matters.

When I find myself rooting for TikTok over another app, I know that something has gone deeply wrong.