Obsessing About Selection

I’m currently trying to solve a fun problem that’s captured my attention and refuses to relent. Here’s the basic setup:

- A collection of k devices arrive at a shared channel. Each device has a message to send.

- Time proceeds in synchronized rounds. If more than one device tries to send a message on the channel during the same round, there’s a collision and all devices receive a collision notification instead of a message.

- The devices do not know k.

In this setup, a classic problem (sometimes called k-selection) is devising a distributed algorithm that allows all k devices to successfully broadcast in a minimum number of rounds. The best known randomized solutions to this problem require a*k rounds (plus some lower order factors), for a small constant a > 2.

What I am trying to show is that such a constant is necessary. That is: all distributed algorithms require at least b*k rounds for some constant b bounded away from 1 (and hopefully close to 2).

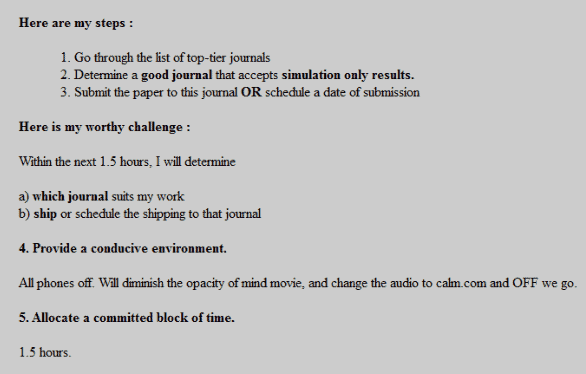

The Dash Method

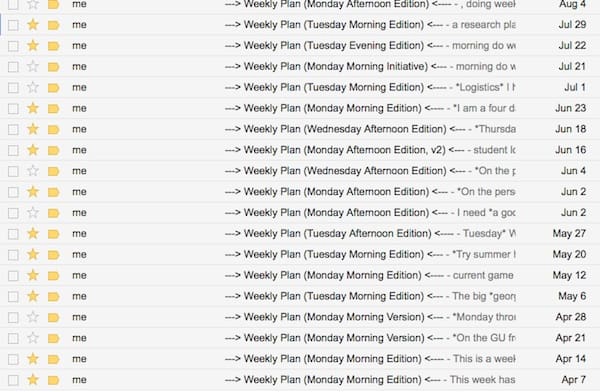

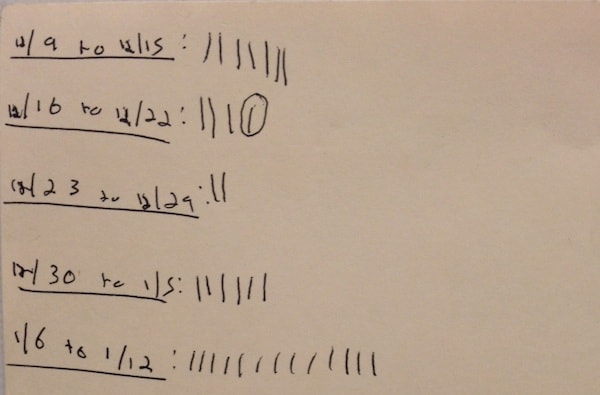

What I’ve noticed in my thinking about this problem over the past week or two is that at the beginning of each deep work session, I’ll typically come up with a novel approach to attempt. As I persist in the session, however, the rate of novelty decreases. After thirty minutes or so of work I tend to devolve into a cycle where I’m rehashing the same old ideas again and again.

I’m starting to wonder, therefore, if this specific type of deep work, where you’re trying to find a creative insight needed to unlock a problem, is best served by multiple small dashes of deep work as oppose to a small number of longer sessions.

That is, given five free hours during a given week, it might be better to do ten 30-minute dashes as oppose to one 5 hour slog.

Lounging in Lauinger

Lounging in Lauinger